Enabling drones to ‘see’

Computer vision collision avoidance tested

The Cessna Skycatcher aimed for a spot in the sky 500 feet from a drone able to fly at a gross weight of up to 33 pounds, all to prove that a camera and a computer combined can make artificial “see and avoid” capability a reality.

The encounter was captured on video, a snippet of progress toward an ambitious goal: to make it possible for a drone flown beyond visual line of sight (BVLOS) to correctly identify other aircraft, and, when needed, to automatically give way. This approach could one day be deemed an artificial means of compliance with 14 CFR 91.113, the most literal version of digital see and avoid, a capability derived from a computer analyzing a live video image and differentiating aircraft from other objects.

“That’s the track we’re on,” Bailey said. “I won’t be naïve enough to say that we’re there, yet. That’s what we’re trying to build towards.”

Bailey described the origin of the effort, how CEO Alex Harmsen (who started flying lessons at 16) worked during his tenure at the NASA Jet Propulsion Laboratory on giving the Mars rovers much the same kind of computer vision capabilities that he now seeks to give to drones. Harmsen met Chief Technology Officer and co-founder James Howard, whose own résumé includes Boeing unmanned aircraft subsidiary Insitu, in the context of drone racing. They formed a team, and later built their first functional prototype in a basement.

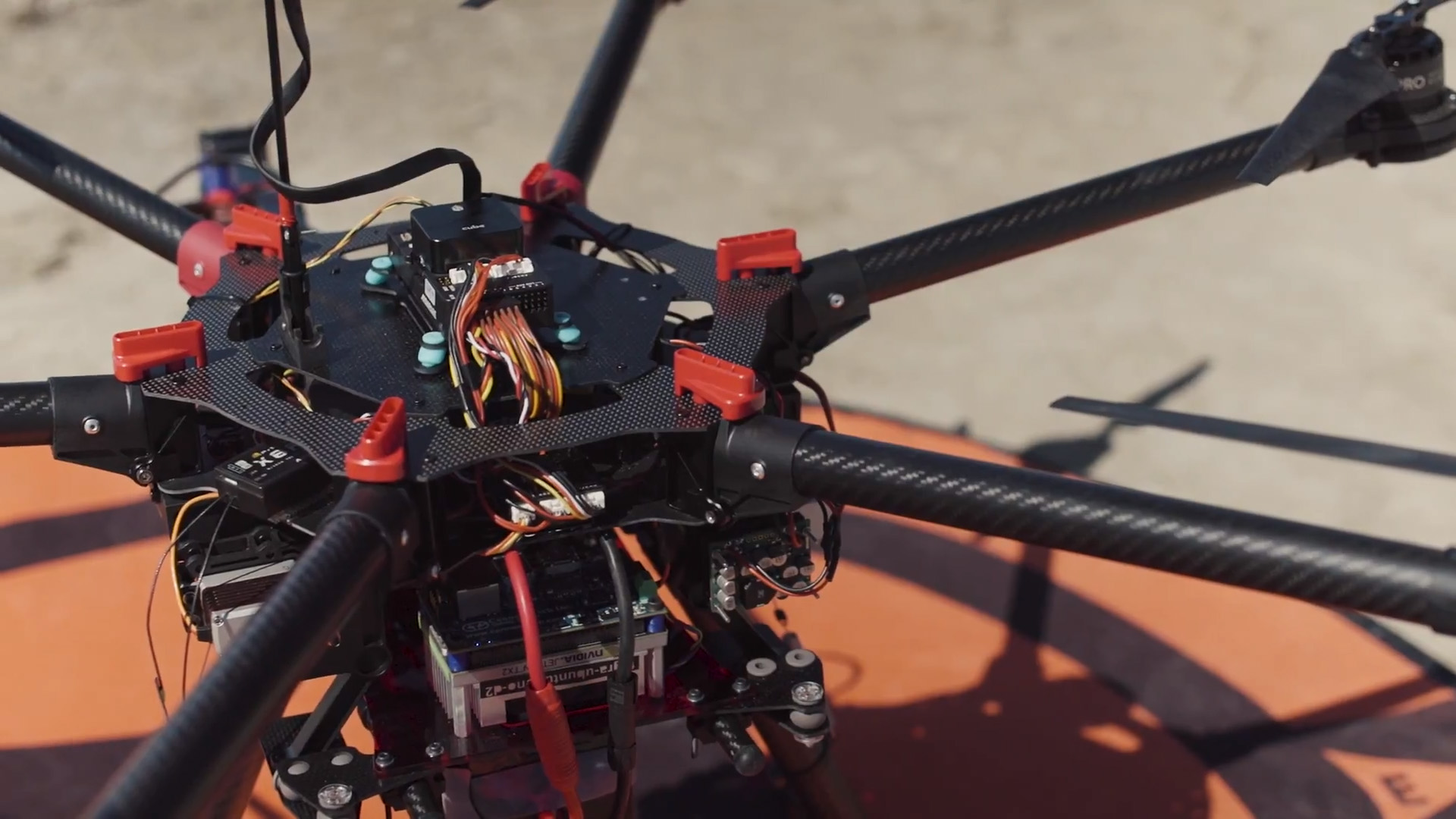

“We’ve grown the company to 22 people,” Bailey said. The system they created has so far been flown on a dozen platforms, including various DJI drones. The basic system consists of a compact computer and one forward-facing camera with a wide field of view, weighs 300 grams, and consumes 15 watts of power. Iris is logging hours and encounters, and carefully recruiting “early adopters” to mount the package (which will eventually support multiple cameras) on their own drones to add to the dataset. So far, they’ve notched many successful detections and correct identifications at ranges of about 1,600 feet. That is not far enough for the system to be trusted to detect and avoid a manned aircraft approaching at high speed without human intervention, but it is a significant step toward standards that have yet to be defined.
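To put the 1,600-foot detection range in perspective, the short Python sketch below estimates how long a drone would have to react once another aircraft is spotted at that distance. The closing speeds are illustrative assumptions, not figures from Iris Automation, and the calculation treats the encounter as a simple head-on closure at constant speed.

```python
# Rough time-to-closure estimate for a head-on encounter.
# The 1,600 ft range comes from the article; the closing speeds
# below are assumed values chosen only for illustration.

FEET_PER_NAUTICAL_MILE = 6076.12
SECONDS_PER_HOUR = 3600.0


def seconds_to_closure(detection_range_ft: float, closing_speed_kt: float) -> float:
    """Return seconds until two aircraft meet, given range and closing speed."""
    if closing_speed_kt <= 0:
        raise ValueError("closing speed must be positive")
    # Convert knots (nautical miles per hour) to feet per second.
    closing_speed_fps = closing_speed_kt * FEET_PER_NAUTICAL_MILE / SECONDS_PER_HOUR
    return detection_range_ft / closing_speed_fps


if __name__ == "__main__":
    detection_range_ft = 1600.0
    for speed_kt in (60, 100, 150, 250):  # assumed closing speeds
        t = seconds_to_closure(detection_range_ft, speed_kt)
        print(f"{speed_kt:>3} kt closing speed: {t:.1f} s to react")
```

Under these assumptions, a 100-knot closing speed leaves about 9.5 seconds between detection and the point of closest approach, and a 250-knot closing speed leaves under four seconds, all of which must cover detection, classification, and the avoidance maneuver itself.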

ASTM International Committee F38 on Unmanned Aircraft is studying the available research and working to define the technological capability that will be required of future drones that routinely fly BVLOS to deliver vaccines, blood, cheeseburgers, and shoes, to name but a few of the potential missions for drones capable of avoiding collisions and flown over long distances without human intervention.

Iris Automation is not the first to attempt creating a detect-and-avoid system based on computer vision. In 2015, a small startup company called Qelzal, financed in part by a grant from the Defense Advanced Research Projects Agency, brought to InterDrone a demonstration of optical detect-and-avoid, but the Qelzal website is no longer active, and recent efforts to contact those involved with the company were unsuccessful.

The model Iris Automation has embraced includes two key components: an image sensor and a computer that can interpret the image and prompt action. The image sensor that the Iris team chose is a simple camera about as sophisticated as a years-old mobile phone camera, and the engineers envision matching more capable cameras to the computer. The tradeoffs involve increased weight and power consumption: Attaching too many high-quality cameras to the current computer would feed it too much information to process in a timely fashion. That is expected to change as computers and processors continue to get faster, more compact, and more frugal with electricity. That process is unfolding as firms large and small pursue deep learning, including Israeli tech company Hailo, which recently landed a $12 million influx of venture capital to support the creation of a new generation of computer chips made with deep learning in mind.
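The camera-versus-computer tradeoff described above comes down to how much raw image data the processor must handle each second. The Python sketch below shows the arithmetic; the resolutions, frame rate, and camera counts are hypothetical values chosen for illustration and do not describe the actual Iris Automation hardware.

```python
# Back-of-the-envelope estimate of uncompressed video data rate.
# All camera parameters here are assumptions for illustration only.


def raw_video_rate_mb_per_s(
    width_px: int,
    height_px: int,
    bytes_per_pixel: int,
    frames_per_s: float,
    cameras: int = 1,
) -> float:
    """Return the uncompressed data rate in megabytes per second."""
    bytes_per_frame = width_px * height_px * bytes_per_pixel
    return bytes_per_frame * frames_per_s * cameras / 1e6


if __name__ == "__main__":
    # One modest camera versus several higher-resolution cameras (assumed specs).
    single = raw_video_rate_mb_per_s(1280, 720, 3, 30, cameras=1)
    multi = raw_video_rate_mb_per_s(3840, 2160, 3, 30, cameras=4)
    print(f"one 720p camera:  {single:.1f} MB/s")
    print(f"four 4K cameras:  {multi:.1f} MB/s")
```

Because the data rate scales with pixel count, frame rate, and number of cameras multiplied together, a modest upgrade on each axis can multiply the processing load many times over, which is why more capable cameras depend on faster, more efficient processors.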

Competing strategies to enable drones to detect and avoid other aircraft have also landed their share of private investment. PrecisionHawk recently went public with an acoustic detection system, and another startup called Echodyne has begun selling a compact radar system that is sized for drones. Bailey said those other approaches to in-flight aircraft detection by computers may prove to be part of the ultimate solution as well.

“We consider ourselves to be a sensor fusion company,” Bailey said, adding the goal is to find and field what works, whether it’s a system based on one type of sensor or another, or a combination of all of them.

“We’re run by pilots. We have pilots on staff,” Bailey said. “We’re very open to fusing sensors and increasing situational awareness.”

The Iris team is nonetheless focused on simple cameras for now, and looking to ramp up the image processing capability over time within a small power consumption budget that could make their system cheap, effective, and able to be deployed across thousands upon thousands of drones.

“The beautiful thing about vision technology and optical technology is it’s nearly linear in terms of scalability,” Bailey said.

Iris Automation is now working with Transport Canada on BVLOS Proof of Concept trials, among the many ongoing efforts to send drones farther and farther from their home point. The company is also eager to team up with participants in similar efforts in the United States. With virtually all participants in the Unmanned Aircraft Systems Integration Pilot Program planning to conduct BVLOS operations for purposes ranging from pest control to the delivery of defibrillators, there will likely be takers.

“We’re really looking for folks who are pushing the boundaries on what commercial and industrial drones can be used for,” Bailey said.